by Suraj Uttamchandani, Tristan Tager, and Daniel Hickey

We discuss our recent pilot of networked online approaches for providing supplemental instruction for learners in calculus. This work addresses the raging debate over how to serve underprepared college entrants who are at high risk of failing "gateway" introductory courses, particularly in STEM and composition. Placing such students in remedial Developmental Education (DE) courses generates tuition revenue and fits within the existing course structure. But decades of research show that this approach does not work: many of these students never move from this "remedial ghetto" into credit-bearing courses, and fewer still go on to graduate. Most now argue for providing peer-assisted Supplemental Instruction (SI) to support all students in challenging gateway courses. But because SI does not generate tuition, it draws on existing instructional resources. Additionally, SI requires space, scheduling, and supervision that many schools struggle to sustain. In this blog post we introduce an alternative approach to supplemental instruction that draws on recent advances in participatory and networked approaches to online learning.

Higher education is increasingly concerned with student success in gateway introductory courses. Traditionally, students who do not pass a screening test (often provided by a commercial publisher) are placed into remedial Developmental Education (DE) courses, which do not generate credits. Students who pass those courses (often by meeting the criterion on the placement test) are then allowed to enroll in the corresponding for-credit courses.

Because college students in the US can pay for up to one year's worth of remedial DE courses, these courses remain popular with colleges. Increasingly, the DE placement test and curriculum are provided by the same publisher, and increasingly the curriculum consists of automated drill-and-practice computer programs. This makes it simple for colleges to set up courses: they still collect tuition, but only need to hire somebody to monitor the students and pay the publisher a small fraction of the tuition for each student in the class. This is what Austin Community College did when it converted a shopping mall into a remedial math center using ALEKS from McGraw-Hill. This effort was widely reported as a success because it cut the dropout rate from the developmental courses in half. Some schools are going even cheaper, like the University of Maryland System, which simply gives “developmental” students access to Pearson's MathXL system. It also reported increased completion rates for the developmental math courses.

So what’s the problem?

The problem is that DE courses have long been shown to be ineffective. While Austin's project should indeed be celebrated for increasing completion rates, the only statistic that really matters is whether those students then enroll in and succeed in for-credit math courses. Decades of research suggest they will not.

The recent Bridge to Nowhere report from Complete College America summarizes this evidence for the entire range of developmental approaches. We contend that this reliance on commercial "integrated" systems will only make the matter worse. Because these systems focus on the narrow representation of content on their own tests (and possibly even the actual test items), it is a simple matter to boost scores on a targeted test. This is particularly the case with the multiple-choice items that computerized systems need in order to work. Cognitive scientists have shown that the threshold for recognizing whether someone has seen something before is extremely low. This means that even a brief exposure to something leaves someone capable of recognizing that they have seen it before. Just a bit more exposure is needed to recall whether that thing was a correct or incorrect association. So of course scores go up on the placement test. But this does little to prepare students to actually succeed in the subsequent class. It may not even improve performance on non-targeted tests.

Unfortunately, the consequences of these courses can only be uncovered by carefully designed studies. Fortunately, some scholars and centers have dedicated themselves to doing so. In particular, researchers like Judith Scott-Clayton at the Community College Research Center at Columbia University have looked very carefully at both the placement process and the impact of DE courses on success in future courses. And the results are terrible. This evidence was recently compiled in the Bridge to Nowhere report from Complete College America discussed earlier.

Before discussing our recent pilot of an alternative, we want to raise a particular concern regarding the impact of the automated self-paced systems discussed above. These systems are dramatically less costly than conventional courses and textbooks. Once a publisher sets up the website, the marginal cost of each additional student is very close to zero. And these systems support the "anytime/anywhere" learning that students are presumed to crave. For the reasons summarized above, we suspect that these systems are likely to produce larger gains on placement tests than conventional DE classes, but lower success in subsequent courses than conventional DE courses.

Our concerns were bolstered by studies of Pearson's MyFoundationsLab led by Rebecca Griffiths at Ithaka Research with the support of the Gates Foundation. The team at Ithaka started with students who did not pass the math placement test at five schools in the University of Maryland System. In contrast to the many case studies published on Pearson's website, the team at Ithaka randomly assigned the students who volunteered to participate in the study to either use or not use the MyFoundationsLab math software. This is very difficult to do in many school settings because it means withholding potentially useful education from the control students (who in this case were encouraged to access static developmental materials from a website and then retake the placement test). Across multiple sites, students in the three treatment groups were more likely to retake the placement test and had significantly larger gains when they did. While all three treatment groups were slightly more likely to enroll in and pass a subsequent mathematics course, none of these differences were statistically significant. Thus, the researchers concluded that while this online alternative was dramatically less expensive than other alternatives, it had no significant impact on enrollment or success in gateway math courses.

Supplemental Instruction as an Alternative to DE

Concerns with the effectiveness of DE have led to many efforts to provide supplemental instruction (SI) in the form of summer bridge programs, tutoring centers, and recitation sessions. SI has been substantially researched and in general is correlated with improved performance in courses (see, for example, David Arendale’s annotated bibliography of SI studies).

However, the story becomes complicated (a) when SI is compared with other possible investments of those resources and (b) when online learners and new digital technologies are considered. Empirical findings on online SI have been mixed. For example, a study at the University of Melbourne revealed that the affordances of online SI were balanced by technical and social constraints. Other approaches to online developmental education have also leaned toward drill-and-practice versions of SI.

With these problems in mind, we sought to rethink how online environments could better facilitate supplemental instruction. In this pilot work, our team was concerned with secondary access to disciplinary content (in this case, calculus), something that most SI is interested in. Instead of the decontextualization present in many drill-and-practice approaches, we wanted to foster recontextualization; that is, we wanted to support learners in making the mathematical content relevant to their own lives in a way that it was not during regular class time. Even in successful peer tutoring approaches, content is negotiated and presented from the expert’s perspective. Following Engle’s notion of productive disciplinary engagement, we sought to problematize the content from the learners’ perspective, and consequently support learners in the meaning-making necessary to parse complex concepts in calculus.

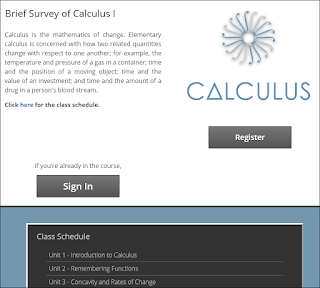

This fall, our team gave learners in an online section of Applied Calculus the option to try a handful of new modules in our Google Course Builder platform. By default, students in the course read an interactive webpage (which includes videos) and complete a 20-question online homework set. They had the option to try the first three units in our platform. For each module they completed a “wikifolio” in which they connected their previous math experiences and their interests (in this case, their major) with specific principles and content in calculus. The same webpage and homework set were embedded into these wikifolios, so that when learners engaged with them they had already begun making important connections.

This making of connections is central to our work. Our approach assumes that productive engagement with calculus deeply connects the course content to each learner's prior experience, current interests, and future goals. Our approach further assumes that students should do this publicly, where they can interact and connect with peers, before getting opportunities to privately practice using their new knowledge on challenging problems. This concern with engaged participation with course knowledge is why we call this approach Participatory Learning and Assessment (PLA) and refer to these modules as Calculus PLAnets (Participatory Learning and Assessment Networks).


What is a “Wikifolio?”


Wikifolios are stand-alone editable documents that contain guidelines, open educational resources, edit windows, and private practice problems. In edit mode, learners can enter their responses to each set of instructions using a standard WYSIWYG editor. As shown below, by clicking the gray bars, the instructions for each section can be revealed (top) or hidden (bottom). Learners can save their drafts and publish them so their peers and instructor can view and discuss them.

The first wikifolio introduced learners to calculus, the wikifolio structure, and the online problem practice platform (ExamScram). After the overview of the wikifolio, learners introduced themselves to their classmates and discussed their previous experience with math. Below is a real, anonymized example from one of the students (a fairly typical student). Importantly, students are encouraged to examine the wikifolios of their classmates, particularly if they are having trouble.

Next, learners watched two brief online videos that explain calculus generally (you can see them here and here). These videos were selected carefully so as not to overwhelm students and to help them connect the disciplinary knowledge of calculus with their own lives. Learners were then instructed to write a few sentences about whatever connections they could make.

Learners were then instructed to search the web to find a resource that makes additional connections between calculus and their primary discipline.

Then, learners were directed to set up their ExamScram accounts and then look at their peers’ wikifolios, comparing their connections and getting to know one another.

A Typical Calculus PLAnet Wikifolio

In a typical wikifolio, learners first learn the general idea of the unit topic, usually through a short webpage or video. Then, they can talk about initial connections as well as previous experiences with the topic. The third wikifolio discusses rates of change. For this post we will show an example from a different student, a marketing major.

Then, they “Engage and Apply” their ideas through five activities. First, they visit the interactive webpage at ExamScram.

Then, students try five basic problems/equations. They rank those equations in terms of how much each one helped the ideas “click.” This also gives them a better sense of how well they understood the ExamScram content. This simple routine is a key innovation in PLA. The act of ranking for relevance and justifying that ranking is a simple way of engaging students with the content and with each other.

We think that this informal process of recognizing the affordances of different equations is a more effective alternative than what typical students would be doing at this point: comparing the different answers to multiple-choice practice items. Because it is done in public, students who are struggling (or even just unambitious) can look at the work of more experienced and/or ambitious peers for insights. Because peers are responding to the same prompt from a different perspective, we expect this knowledge to be easily comprehended.

Once they’ve tried the problems, they read or watch 2-3 other open educational resources that cover the same topic. They then rank those resources in terms of how useful they were, with respect to their major or the issues they encountered in the previous activity.


Again, this simple rank-and-justify activity is a variation of the same general engagement strategy. This is where our situated learning theory points us toward a very different way of using practice problems and resources than a "flipped classroom," where instructors or experts select the videos that they think are ideal for their students. Plus, students don't need to spend a lot of time learning how the instructor wants them to engage; they do the same thing every time.

After they have engaged with those videos in order to rank them (and presumably learned more), learners return to ExamScram, where they practice using that new knowledge to complete a problem set.

These problems are automatically graded. They also take place privately, so, unlike the rest of the wikifolio, a student’s performance on these problems is not made available to their peers.

One of our design principles illustrated here is that curriculum with "known answer" questions should take place in private. This is because that peculiar way of representing the knowledge of a domain does not lend itself to productive disciplinary engagement.

They then write briefly about their updated understanding of the connection between the week’s topic and their major.


At the heart of this approach is interaction with peers. The interactions can be quite rich, usually involving discussion about the learner’s focus discipline. Students are instructed (but not required) to comment on their peers' work. The instructor's role in these contexts is to encourage students and acknowledge good examples (Tristan served as the instructor and was a TA in the course).

This context also provides an opportunity for the instructor to bring up new points and link to outside resources.

This has been really helpful in other PLA settings. What we realized was that the instructor can insert more advanced ideas and resources that might overwhelm less experienced learners if they were inserted in the assignment itself. Importantly, the comments appear directly at the bottom of a learner’s wikifolio. We know from our prior research that, as a result, over 90% of the comments reference the topic of the assignment, and most of the rest reference the topics of the course. We have also found that from one quarter to one half of the comments reference a context of practice of either the wikifolio author or the person posting the comment. This is huge for us because we think that declarative knowledge of the discipline must be linked to a meaningful context of use for it to be useful beyond typical assessments.

Finally, learners reflect in three ways. They talk about the intersection of their discipline and math. Then they talk about who they worked with. Lastly, they discuss the big take-aways of the unit.

These reflection prompts have been refined extensively. The first one is very specific to the learner's own context. The second one is an informal assessment of collaboration that encourages students to get themselves recognized by their peers for being helpful. The third one builds on Melissa Gresalfi's notion of consequential engagement and is more general. We have learned over the years that students can't really draft coherent reflections like this without having engaged meaningfully with the assignment. In this way the reflections serve as a summative assessment of engagement while also serving as a formative assessment of individual understanding.

What’s next?


Even in this short pilot, it is clear that learners can find meaningful intersections between their interests and calculus. Furthermore, we believe that contextualizing practice problems with meaningful connections and peer interaction is going to significantly improve students’ scores on traditional tests.

This pilot has revealed that Participatory Learning and Assessment in mathematics is clearly possible. We look forward to scaling up this work. We are also starting to search for other STEM courses in which to run similar pilots. This work was funded by an IU Faculty Resubmission Support Program grant. We almost got funded by the NSF to do this work, but they wanted prototypes. This is our first one; with another one in another STEM domain and a bit more evidence, we are confident we will get the funds needed to iteratively refine these modules, make them freely available as open educational resources, and study their effectiveness in experimental studies.
